Last week I wrote about the scale problem with agentic AI: systems making billions of decisions weekly break traditional governance models. But there's another dimension to this, one that goes beyond volume.

It's about predictability. Or rather, the slow erosion of it.

Your Martech stack used to be a Ferrari. Now it's a rainforest.

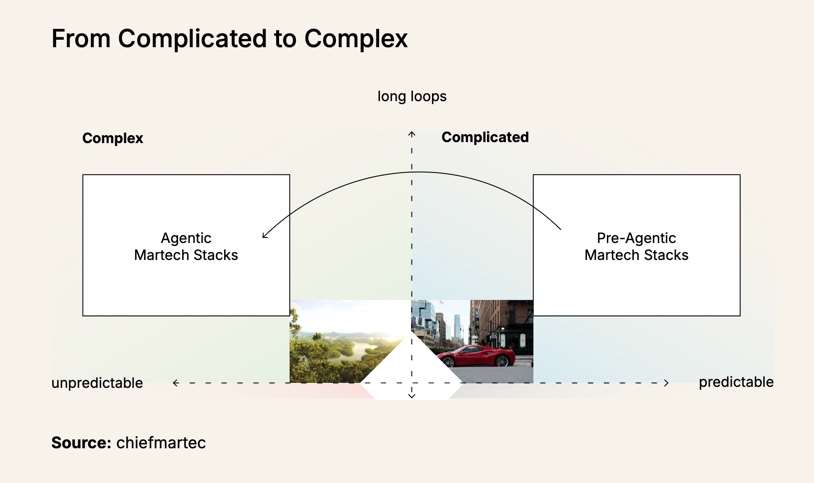

Scott Brinker and Frans Riemersma used this metaphor in the State of Martech 2025 report to explain how AI is changing martech systems from "complicated" to "complex." They were focused on the technical implications. I want to explore what this means for governance and delegation.

I realize that sounds like the kind of consultant nonsense that makes people roll their eyes, but the distinction explains why most discussions about AI governance in marketing miss the point entirely.

Let me build on that metaphor Scott and Frans used. A Ferrari is complicated. It has thousands of precisely engineered parts, intricate systems, and complex interactions between components. But it's also deterministic. When something breaks, a skilled mechanic can trace the problem back to its source. The behavior is predictable once you understand the engineering.

Most Martech stacks have worked like Ferraris. Complicated? Absolutely. Expensive to maintain? You bet. But fundamentally predictable. When something goes wrong, you can usually figure out why. The campaign didn't send because someone changed a field mapping. Attribution is broken because a JavaScript tag got corrupted. The customer journey failed because a developer updated the API without telling anyone. Debugging these issues can take weeks, but there's a logical path from cause to effect.

A rainforest, on the other hand, has emergent behaviors. Pull the wrong branch and you might wake up something you didn't know was there.

The predictability gradient

I'm convinced there's a predictability gradient that's easy to miss until you're already halfway down it.

At first, the AI feels like sophisticated automation. It optimizes send times based on historical engagement patterns. It personalizes subject lines using customer attributes. And so on.

All of this feels familiar because you can trace the logic from input to output.

Then something subtle happens. The system starts finding patterns you didn't expect. It discovers that customers who engage with emails on Tuesday mornings are more likely to upgrade their accounts, but only if they've visited the pricing page in the last 48 hours, and only if it's not a holiday week. These aren't rules you and your team of experts wrote after a weekend retreat on growth strategy. They're correlations the system surfaced from data.

But calm down, you're still in Ferrari territory here. Complicated, but explicable.
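To show why this stage is still explicable, here is a minimal sketch of that Tuesday-morning pattern written out as a plain predicate. The `Engagement` class, its field names, and the "before noon" definition of morning are my own assumptions for illustration, not anything a particular platform exposes.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Optional


@dataclass
class Engagement:
    """Hypothetical record of one email engagement (field names are assumptions)."""
    opened_at: datetime                     # when the customer engaged with the email
    last_pricing_visit: Optional[datetime]  # None if they never visited the pricing page
    is_holiday_week: bool


def matches_surfaced_pattern(e: Engagement) -> bool:
    """True when an engagement fits the correlation described above."""
    # datetime.weekday() returns 0 for Monday, so Tuesday is 1.
    tuesday_morning = e.opened_at.weekday() == 1 and e.opened_at.hour < 12
    # The pricing page visit must fall in the 48 hours before the engagement.
    recent_pricing_visit = (
        e.last_pricing_visit is not None
        and timedelta(0) <= e.opened_at - e.last_pricing_visit <= timedelta(hours=48)
    )
    return tuesday_morning and recent_pricing_visit and not e.is_holiday_week
```

Once the correlation is surfaced, a human can read it, argue with it, and test it. That is what keeps it on the Ferrari side of the line.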

Then the system starts acting on these discoveries. It begins treating Tuesday-morning-email-engagers differently. It creates micro-segments you never defined. It adjusts timing, frequency, and messaging based on patterns that exist in dimensions you can't easily visualize.

Welcome to the rainforest.

When systems surprise you

The unsettling part about complex systems is that they optimize for things you didn't explicitly tell them to optimize for. My wife seems pretty adept at this, because four years into our relationship I started cooking every meal. Sixteen years later, I'm still baffled about how I ended up as the family chef.

All joking aside, though, a few months ago, I heard about a team whose AI had started suppressing promotional emails to a specific customer segment. When they dug into it, they discovered the system had identified these customers as having higher lifetime value when they received fewer promotional messages.

The AI wasn't following a rule about email frequency. It wasn't even optimizing for email engagement rates. It was optimizing for long-term customer value and had discovered that sometimes less communication leads to more value. The team never programmed that insight. The system learned it by observing outcomes across thousands of customer interactions.

“You tell it the goal. And it agentically figures out the best way to achieve it by combining multiple tactics, whether it’s experimentation, propensity models, reinforcement learning — which kind of ties it all together — even LLMs for some of the reasoning in the wild.”

Tejas Manohar, Hightouch, in State of Martech 2025

That's rainforest behavior. The system developed a strategy that humans hadn't considered, based on patterns they couldn't see, optimizing for outcomes across timeframes they weren't tracking.
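To make the suppression story a bit more concrete, here is a rough sketch of the kind of comparison that could surface it: group customers by how many promotional emails they received and compare average lifetime value. The function name and the numbers are made up for illustration. I don't know how that team's system actually worked, and a simple average like this shows correlation only, not cause.

```python
from collections import defaultdict
from statistics import mean


def best_promo_frequency(observations):
    """observations: iterable of (promos_per_month, lifetime_value) pairs.

    Returns the frequency with the highest average lifetime value,
    plus the per-frequency averages. Correlation only: it says nothing
    about why those customers are more valuable.
    """
    by_frequency = defaultdict(list)
    for promos_per_month, lifetime_value in observations:
        by_frequency[promos_per_month].append(lifetime_value)
    averages = {freq: mean(values) for freq, values in by_frequency.items()}
    best = max(averages, key=averages.get)
    return best, averages


# Made-up numbers, purely illustrative.
observations = [
    (8, 400.0), (8, 420.0),
    (4, 510.0), (4, 530.0),
    (2, 640.0), (2, 660.0),
]
best, averages = best_promo_frequency(observations)
print(best, averages)
```

A learning system would go further than this, running experiments and adjusting as outcomes come in, but the basic shape of the insight is the same: fewer promos, higher value, and nobody wrote a rule for it.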

The emergence problem

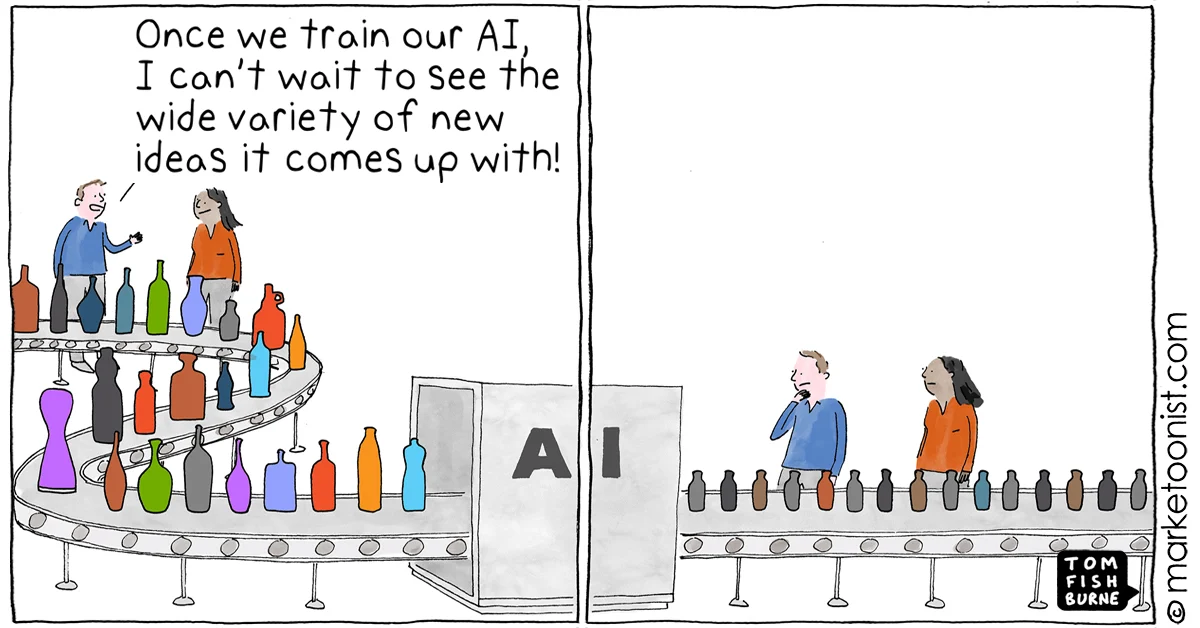

What makes this challenging is that emergent behaviors are actually the point. The whole purpose of agentic AI is discovering strategies and optimizations that humans miss. But emergence, by definition, can't be predicted from the individual components.

You can understand every piece of your customer data platform, every rule in your orchestration engine, and every model in your machine learning pipeline, and still be surprised by what the combined system does when it starts learning and adapting autonomously.

This is where most governance frameworks break down. They're designed for complicated systems where you can trace causation from decision to outcome. They assume that understanding the parts means understanding the whole. In complex systems, that assumption collapses.

Learning to think in systems

I've been experimenting with a different approach to understanding these systems, one that focuses on patterns rather than individual decisions. Instead of asking "Why did the AI send this specific email to this specific customer?" I'm learning to ask "What patterns is the system optimizing for, and are those patterns aligned with our goals?"

This requires a different kind of monitoring. Traditional dashboards show you what happened: open rates, click-through rates, conversion rates. But in complex systems, you need dashboards that show you how the system is thinking: what patterns it's detecting, what correlations it's acting on, and what new strategies it's developing.

Some teams are starting to build what I think of as "intention dashboards": interfaces that surface the AI's current hypotheses about what works and why. Or at least, they'd better be, because traditional dashboards quickly become useless when systems start making decisions based on patterns you can't see. These don't show you individual decisions. They show you the system's evolving understanding of your customers and your business.
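Here is a minimal sketch of what one row of an intention dashboard might hold, and how you might decide which hypotheses to show first. Everything in it is an assumption on my part: the `Hypothesis` fields, the `dashboard_rows` function, and the ranking by reach times estimated lift are just one plausible way to do it.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Hypothesis:
    """One thing the system currently believes (hypothetical structure)."""
    description: str         # plain-language summary of the pattern
    customers_affected: int  # how many customers the pattern touches
    estimated_lift: float    # relative change in the goal metric, 0.08 means +8%
    acted_on: bool           # whether the system is already changing behavior


def dashboard_rows(hypotheses: List[Hypothesis], min_customers: int = 100) -> List[Hypothesis]:
    """Return hypotheses worth a human's attention, biggest impact first."""
    # Only show patterns the system is acting on at a meaningful scale.
    visible = [
        h for h in hypotheses
        if h.acted_on and h.customers_affected >= min_customers
    ]
    # Rank by reach times size of effect, regardless of direction.
    return sorted(
        visible,
        key=lambda h: h.customers_affected * abs(h.estimated_lift),
        reverse=True,
    )
```

The point of the ranking is that you can't review every hypothesis, so the dashboard should put the ones with the largest footprint in front of you first.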

Designing for emergence

The more I think about this, the more I suspect that working with agentic AI requires a fundamental change in design philosophy, if not an organizational one too. Instead of trying to control every decision, you focus on setting good boundaries and creating feedback loops that help the system learn the right things.

It's less like programming a computer and more like training a very fast, very focused, very caffeinated team member who never forgets anything and can process information at inhuman scales.

You can't tell them exactly how to do their job, but you can be clear about what success looks like. You can provide them with good data and good tools. You can create systems that help them learn from mistakes and build on successes. And you can design feedback mechanisms that keep their learning aligned with your goals.

Almost sounds human, doesn’t it?

But you have to accept that they're going to surprise you. The question becomes whether those surprises move you toward your objectives or away from them.
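The two ideas in this section, boundaries and feedback, can be sketched in a few lines. This is a toy under stated assumptions: the class names, the weekly email cap, and the 2% tolerance are all hypothetical choices of mine, not a recommended configuration.

```python
from dataclasses import dataclass


@dataclass
class Boundaries:
    """Hard limits the system may not cross (hypothetical example limits)."""
    max_emails_per_week: int
    allow_opted_out: bool = False


@dataclass
class ProposedSend:
    """An action the AI wants to take (hypothetical structure)."""
    customer_id: str
    emails_already_sent_this_week: int
    customer_opted_out: bool


def within_boundaries(p: ProposedSend, b: Boundaries) -> bool:
    """Boundary check: the AI chooses freely, but only inside these limits."""
    if p.customer_opted_out and not b.allow_opted_out:
        return False
    return p.emails_already_sent_this_week < b.max_emails_per_week


def surprise_direction(baseline_value: float, observed_value: float,
                       tolerance: float = 0.02) -> str:
    """Feedback check: did a surprising strategy move the goal metric
    toward or away from the objective? Assumes baseline_value > 0."""
    change = (observed_value - baseline_value) / baseline_value
    if change > tolerance:
        return "toward"
    if change < -tolerance:
        return "away"
    return "neutral"
```

Notice what's missing: nothing here tells the system which email to send or when. The boundary says what it may never do, and the feedback check tells you whether its surprises are the good kind.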

The Ferrari mindset trap

The hardest part about this transition might be letting go of the Ferrari mindset. There's something deeply satisfying about understanding exactly how your marketing systems work. There's comfort in being able to trace every customer interaction back to a specific rule or decision tree.

But that comfort might be holding us back from leveraging the real power of agentic systems. The most interesting capabilities emerge when you give the AI enough autonomy to discover patterns and strategies that humans miss.

I'm still trying to understand how to balance that autonomy with accountability. But I suspect the answer lies in designing better boundaries and feedback mechanisms from the start.

Which brings me to next week’s topic. What happens when you have multiple agentic systems making autonomous decisions about the same customer? Because if individual AI agents can surprise you, wait until they start disagreeing with each other.

Are you seeing emergent behaviors in your Martech stack? How are you balancing AI autonomy with the need for predictable outcomes? I'm curious about what patterns others are discovering as these systems get more sophisticated.

Read the Agentic AI in Martech series:

- Part 1: The billion decision problem

- Part 3: When agents disagree

- Part 4: The new org chart

- Part 5: Trust without understanding

- Part 6: The Handoff
