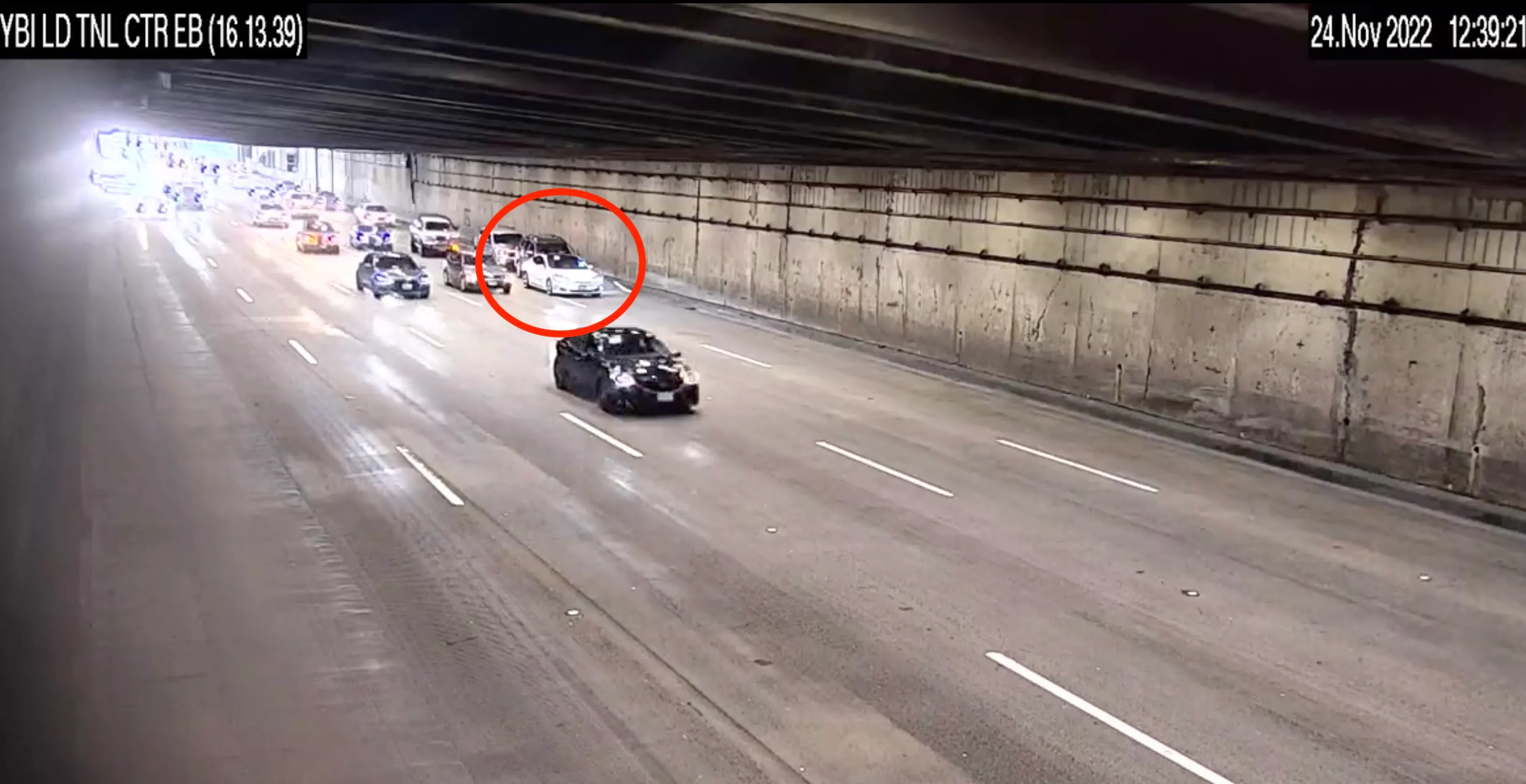

My Tesla occasionally does something that makes me question everything I think I know about driving. Since I have owned it, there have been numerous times when it suddenly slammed on the brakes while on autopilot, no visible obstacles, no construction signs, perfect weather. Just phantom braking, the phenomenon that’s been getting Tesla scrutinized by government agencies.

My wife hates when this happens. She’s made it very clear that I shouldn’t rely on autopilot when the family is in the car, both for safety reasons and because she’d prefer I not sleep on the couch.

This captures something fundamental about working with agentic AI systems. Although we often discuss them positively, what we need to acknowledge is that sometimes they make decisions that seem wrong and actually are wrong, but you can’t predict when. The challenge isn’t just understanding those decisions, it’s designing for trust in systems that operate beyond your comprehension and occasionally betray it in unpredictable ways.

This is something I started exploring in the Contextual CDPs series. There, trust was framed as something built not through control, but through contingency. The idea that you could design a system where trust doesn’t mean “knowing exactly why” but “knowing when to intervene, and when not to.” That framing is becoming even more relevant now.

Explainability as a Service

Most discussions about AI governance start with explainability. We want systems that can tell us why they made specific decisions, show us their reasoning, and walk us through their logic step by step.

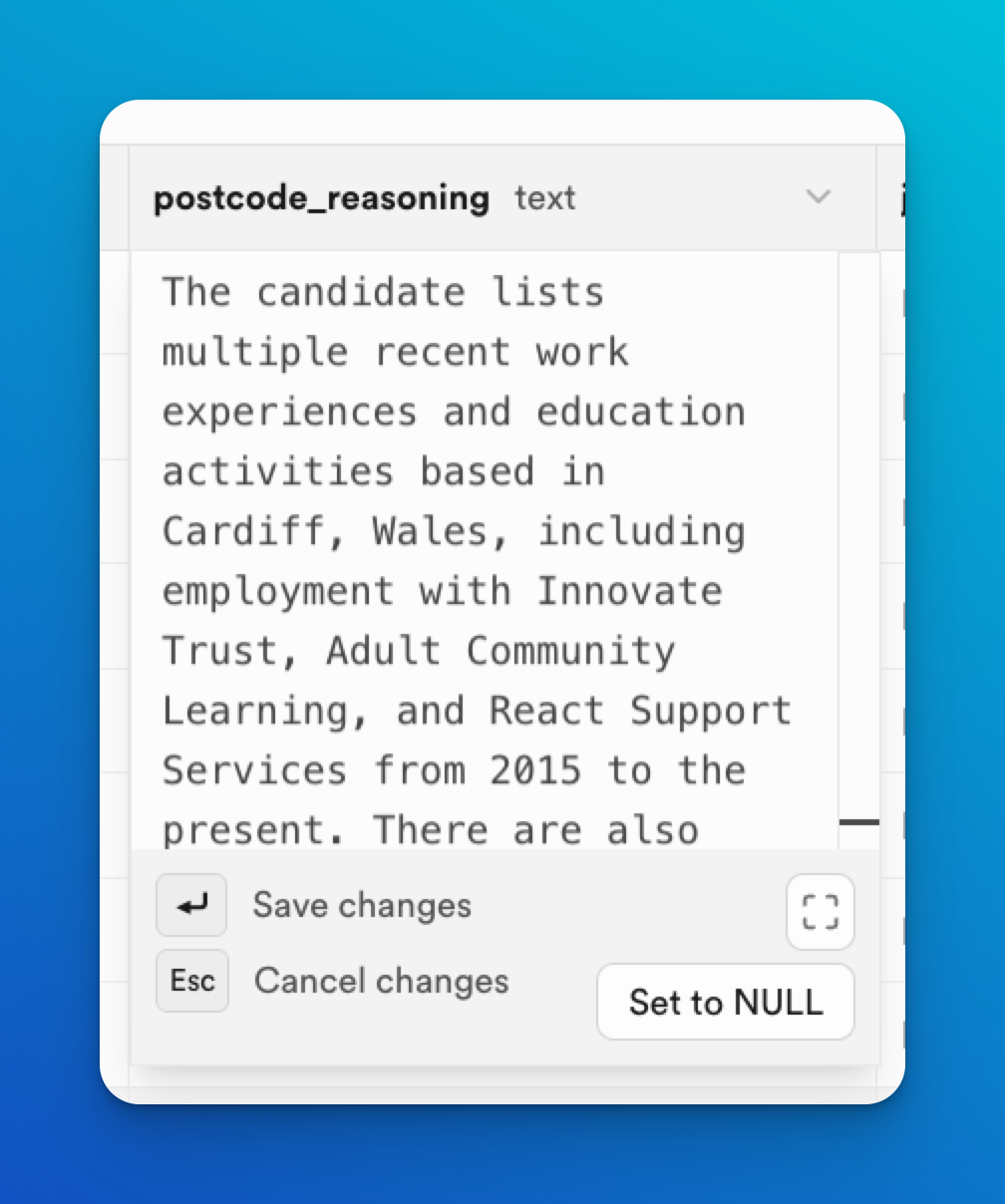

This makes intuitive sense for simple AI applications. If your lead scoring model gives someone a high rating, you want to know it’s because they visited your pricing page three times and downloaded two whitepapers, not because their email address contains the letter ‘Q’.

But explainability breaks down once systems become agentic. The logic behind any individual decision might be distributed across thousands of micro-updates, model weights, and cross-context correlations. As I argued in the Trust as a feature post from the Contextual CDP series, the more expressive a system becomes, the harder it gets to map one input to one output in a way that makes human sense. You don’t get a flowchart. You get a shadow.

Building trust through outcomes

So I’ve been shifting the trust question. Instead of asking why the AI made a specific choice, I ask whether those choices, over time, drive outcomes I care about. Trust, in that sense, becomes pattern-based.

You’re no longer judging individual decisions. You’re watching the system’s trajectory. Does it consistently learn in a direction that aligns with your goals? Does it adapt in ways that make your orchestration better, not weirder?

This is a big change from traditional governance and it is a pattern we see starting to emerge in tools like Hightouch’s AI Decisioning, and Aampe’s core offering. It requires moving beyond reports that show “what happened” to observability models that detect how the system is evolving. It’s about observing learning.

Confidence calibration problem

Like many salespeople, agentic systems love being confident. Sometimes, weirdly so.

An AI might be 97% sure that sending a promotional email at 3:47 AM on a Tuesday is optimal for a customer, based on dozens of variables and millions of interactions. But, like my Tesla’s phantom braking problem, that confidence might rest on a spurious correlation involving nothing more than timezone anomalies and a broken event tag.

That’s why outcome monitoring has to include calibration. Is the system as good at knowing when it’s right as it is at being right? In other words, does confidence correlate with correctness?

If not, trust can’t scale. You’re not building a system, you’re betting on a gambler.

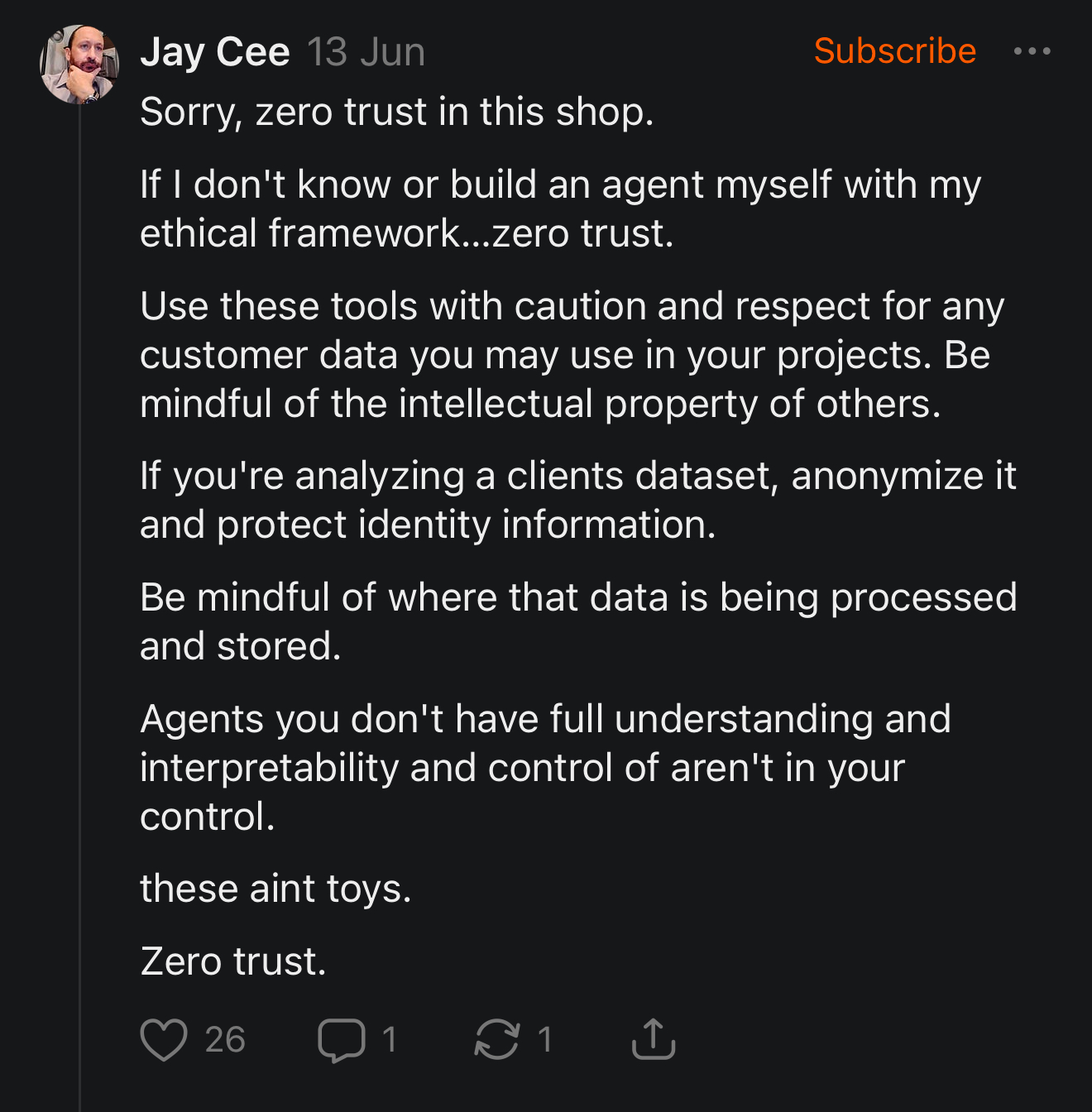

Designing trust boundaries

In the Letting Go of Control article, I introduced the idea of delegation design. You don’t trust an agent by default, you trust it within bounds. These bounds aren’t static rules but operational guardrails, shaped by context, stakes, and reversibility.

Some are obvious, such as don’t send more than X emails, don’t discount more than Y percent. But others are more dynamic. Something along the lines of escalate if recent support tickets exist, override if confidence drops below a threshold, or route to human judgment when a decision breaks from historical precedent.

Agentic AI demands a new kind of policy architecture, what Paul Meinshausen and I discussed as meta-logic: rules about when rules need to be negotiated. Think more like air traffic control, less like campaign workflows.

Trust through reversibility

I’ve found one of the best proxies for safe autonomy is reversibility. If a decision can be undone quickly with minimal impact, you can afford to let agents experiment. If not, you need to contain or delay that autonomy.

This principle, trust the system more when failure is cheap, has quietly been a guiding force in agentic deployments. You start with email timing, not price modeling. You scale from button color to bundling logic. It’s about aligning trust with stakes.

Reversibility is organizational. Who owns the override? How fast can recovery happen? What signals trigger rollback?

The learning partnership model

This brings me back to the idea of co-evolution. In the Contextual CDPs series, I described a trust model where AI systems and human teams evolve together. The AI detects subtle patterns. Humans evaluate their usefulness. Feedback flows in both directions.

Paul put it this way:

Agentic systems don’t eliminate business logic, they expand it.

They move you from rule-based control to pattern-based influence. That means trust becomes less about authority and more about adaptation.

But adaptation only works when both sides are learning. AI can’t just execute. Humans can’t just override. The loop is the thing.

Accepting productive uncertainty

The more I work with agentic systems, the more I realize that perfect understanding isn’t the goal. What matters is productive uncertainty, systems that surprise you in useful ways, not just weird ones.

That’s where the trust architecture really changes. You stop treating explainability as a requirement and start treating responsiveness as a capability. You design for reversibility. You monitor for pattern drift. You establish feedback rituals that evolve alongside the system.

It’s about designing environments where new logic can emerge, safely, directionally, and occasionally insightfully.

Even if it means braking for something you can’t see.

Read the Agentic AI in Martech series:

- Part 1: The billion decision problem

- Part 2: From complicated to complex

- Part 3: When agents disagree

- Part 4: The new org chart

- Part 6: The Handoff

👥 Connect with me on LinkedIn:

📲 Follow Martech Therapy on:

- Instagram: https://www.instagram.com/martechtherapy/

- Youtube: https://www.youtube.com/@martechtherapy

- Bluesky: https://bsky.app/profile/martechtherapy.bsky.social

Thanks for reading Martech Therapy! Subscribe for free to receive new posts and support my work.

Discussion