Picture this scenario: your personalization AI decides that Sarah (Conner? 😅), a loyal customer, should receive a premium upgrade offer because her engagement scores have been climbing. Simultaneously, your churn prevention AI flags Sarah as a retention risk and recommends a discount promotion to keep her engaged. Meanwhile, your email frequency optimization AI determines Sarah is approaching communication fatigue and suggests suppressing all promotional messages for the next week.

Three different systems, three different conclusions, one very confused customer experience.

Thanks for reading Martech Therapy! Subscribe for free to receive new posts and support my work.

This is the multi-agent conflict problem, and I'm convinced it's going to become one of the defining challenges of agentic AI in Martech. When individual systems start making autonomous decisions, conflicts become inevitable. The question becomes: who wins?

The democracy problem

Traditional marketing automation avoided this issue through hierarchy. You had one system making decisions, or you had clear rules about which system took precedence. Email marketing trumped display advertising. Retention offers overrode acquisition campaigns. Simple.

But agentic systems complicate this hierarchy because they're all optimizing for outcomes, sometimes across different timeframes and different definitions of success. Your personalization AI might optimize for immediate engagement. Your lifetime value AI optimizes for long-term revenue. Your brand sentiment AI optimizes for customer satisfaction.

When these systems disagree, you can't just flip a coin or fall back on "first come, first served." Each agent has reasoning behind its recommendation, often based on patterns the others haven't considered.

Speed vs. deliberation

Here's where the scale problem from Part 1 resurfaces. These conflicts happen at machine speed across millions of customers simultaneously. You can't exactly call a meeting to hash out whether Sarah should get the upgrade offer or the retention discount.

I've been thinking about this in terms of conflict resolution architectures. Some teams are experimenting with hierarchy-based systems where certain agents get priority in specific scenarios. Others are building consensus mechanisms where agents essentially vote on the best action.
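To make the two approaches concrete, here's a minimal sketch that runs Sarah's three conflicting recommendations through a fixed hierarchy and through a confidence-weighted vote. The agent names, action labels, priority order, and confidence numbers are all hypothetical, chosen only for illustration.

```python
from collections import defaultdict
from dataclasses import dataclass

@dataclass(frozen=True)
class Recommendation:
    agent: str         # hypothetical agent name
    action: str        # hypothetical action label
    confidence: float  # the agent's own confidence, in [0, 1]

# Hypothetical fixed priority: a lower number wins.
PRIORITY = {"frequency": 0, "churn": 1, "personalization": 2}

def resolve_by_hierarchy(recs):
    """Hierarchy: take the action proposed by the highest-priority agent."""
    return min(recs, key=lambda r: PRIORITY[r.agent]).action

def resolve_by_vote(recs):
    """Consensus: sum confidence per action; the highest total wins."""
    totals = defaultdict(float)
    for r in recs:
        totals[r.action] += r.confidence
    return max(totals, key=totals.get)

sarah = [
    Recommendation("personalization", "upgrade_offer", 0.9),
    Recommendation("churn", "retention_discount", 0.6),
    Recommendation("frequency", "suppress_promos", 0.5),
]

print(resolve_by_hierarchy(sarah))  # suppress_promos
print(resolve_by_vote(sarah))       # upgrade_offer
```

Same three proposals, two different winners. The choice of resolution strategy is itself a business decision.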

But both approaches feel clunky when you consider that these systems are processing decisions at the 15 to 200 billion per week scale we discussed in Part 1. Democracy might work for humans (although the current political climate begs to differ), but it doesn't scale well when participants are making thousands of decisions per second.
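To put that weekly range in per-second terms, here's a quick conversion. It assumes decisions are spread evenly across the week. Real traffic is burstier, so peak rates would be higher than these averages.

```python
SECONDS_PER_WEEK = 7 * 24 * 60 * 60  # 604,800 seconds

def decisions_per_second(decisions_per_week):
    """Average rate, assuming decisions are spread evenly over the week."""
    return decisions_per_week / SECONDS_PER_WEEK

for weekly in (15e9, 200e9):
    print(f"{weekly:,.0f} per week -> {decisions_per_second(weekly):,.0f} per second")
# 15,000,000,000 per week -> 24,802 per second
# 200,000,000,000 per week -> 330,688 per second
```

Even the low end is tens of thousands of decisions every second across the stack, which is why any voting or arbitration step has to be measured in milliseconds.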

The context coordination challenge

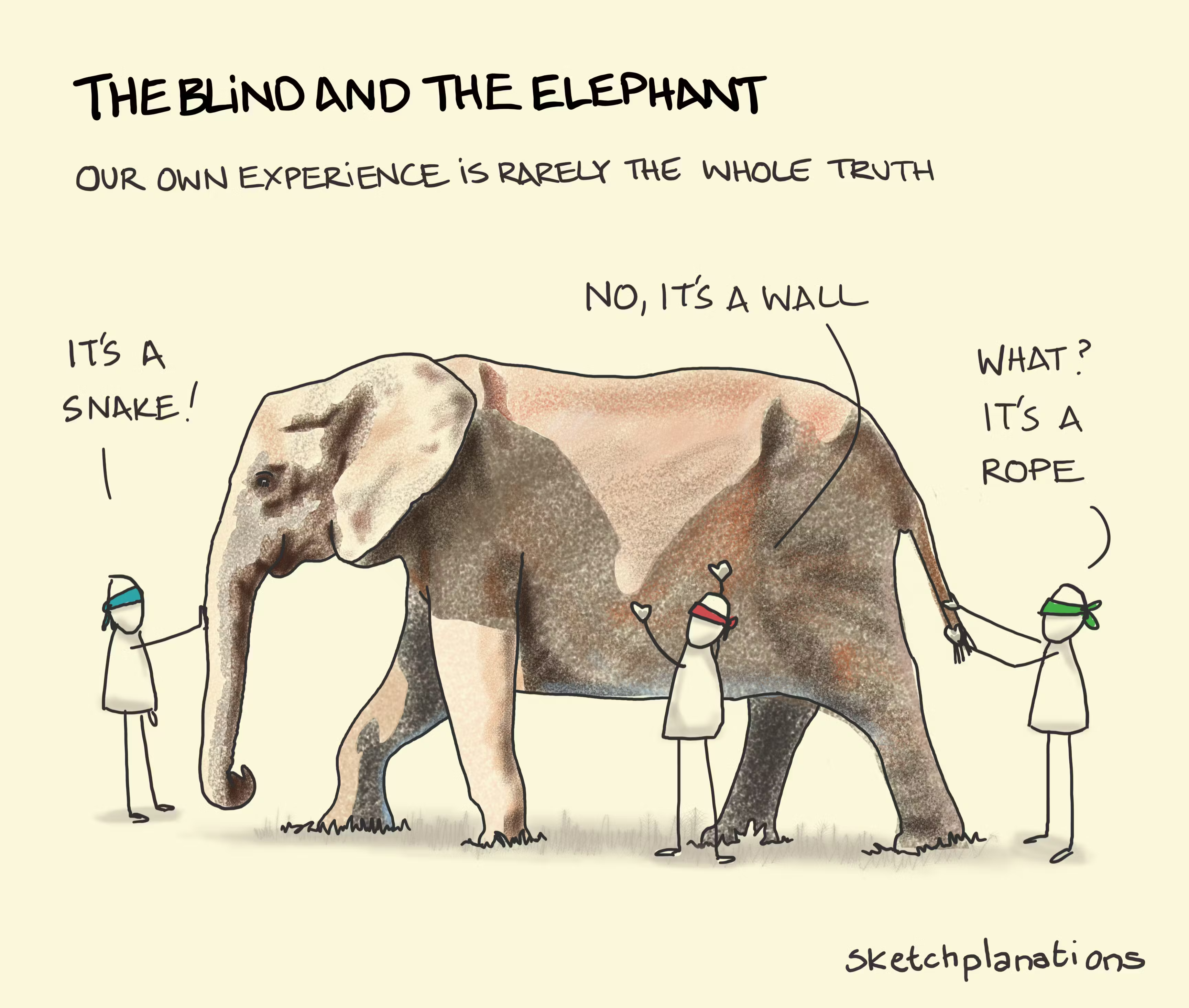

The deeper issue is that each agent operates with its own view of the customer context. Your personalization AI knows about browsing behavior and engagement patterns. Your churn prevention AI focuses on satisfaction scores and support interactions. You catch my drift.

They're all looking at the same customer through different lenses, optimizing for different outcomes, working with different data priorities. In isolation, each agent's recommendation makes perfect sense. The problem emerges when they need to coordinate.

This reminds me of the parable about blind people describing an elephant. Each person touches a different part and comes away with a completely different understanding of what they're dealing with. The trunk feels like a snake, the leg feels like a tree, the ear feels like a fan. They're all correct within their limited context, but none has the complete picture.

Designing conflict resolution

I believe the most interesting work happening right now involves teams designing conflict resolution protocols that can operate at machine speed while preserving the intelligence of individual agents.

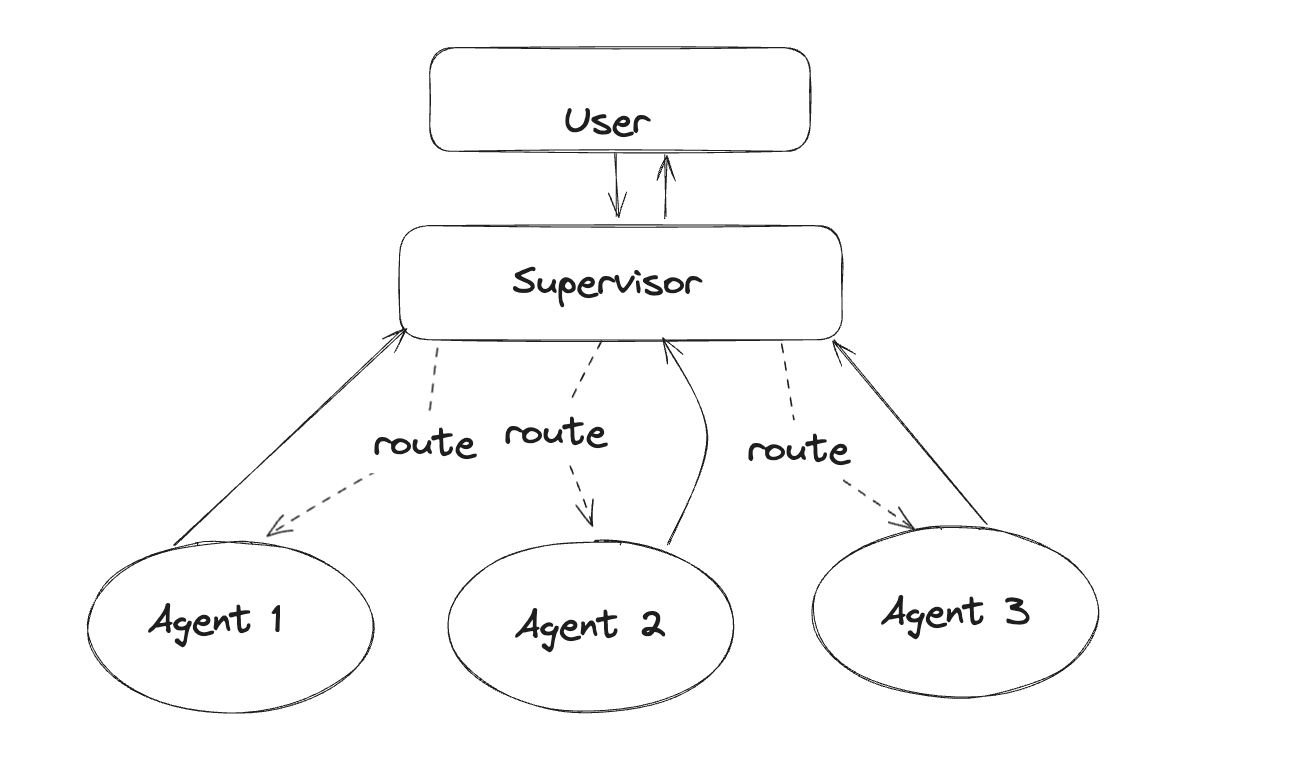

One pattern I've heard about involves creating meta-agents whose job is specifically to arbitrate between other agents. These arbitration systems don't make marketing decisions themselves. They just resolve conflicts between systems that do. Think of them as extremely fast, extremely specialized mediators.
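Here's a minimal sketch of what such an arbiter could look like. It makes no marketing decision of its own. It only removes conflicts from the set of proposals. The conflict table, the greedy keep-highest-value rule, and the `expected_value` scores are all hypothetical assumptions, not a description of any specific team's system.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Recommendation:
    agent: str
    action: str
    expected_value: float  # hypothetical: each agent's own estimate of value

# Hypothetical pairs of actions that should never reach the same customer together.
CONFLICTS = {
    frozenset({"upgrade_offer", "retention_discount"}),
    frozenset({"upgrade_offer", "suppress_promos"}),
    frozenset({"retention_discount", "suppress_promos"}),
}

def arbitrate(recs):
    """Meta-agent: keep proposals in descending expected value,
    dropping any that conflict with one already kept."""
    kept = []
    for rec in sorted(recs, key=lambda r: r.expected_value, reverse=True):
        if all(frozenset({rec.action, k.action}) not in CONFLICTS for k in kept):
            kept.append(rec)
    return kept

sarah = [
    Recommendation("personalization", "upgrade_offer", 12.0),
    Recommendation("churn", "retention_discount", 8.0),
    Recommendation("frequency", "suppress_promos", 15.0),
    Recommendation("support", "show_help_article", 2.0),
]
print([r.action for r in arbitrate(sarah)])  # ['suppress_promos', 'show_help_article']
```

Note that non-conflicting proposals (the help article) pass through untouched. The arbiter only intervenes where agents actually collide.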

Another approach involves contextual hierarchies that change based on customer state. If someone just submitted a support ticket, the customer satisfaction agent gets priority. If they're in an active purchase cycle, the personalization agent takes precedence. The hierarchy adapts to the situation rather than remaining fixed.
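A contextual hierarchy can be as simple as a function that returns a different priority order depending on customer state. In this sketch the state flags, agent names, action labels, and every ordering (including the default) are hypothetical.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class CustomerState:
    open_support_ticket: bool
    in_purchase_cycle: bool

def priority_order(state):
    """Agent names from highest to lowest priority for this customer state."""
    if state.open_support_ticket:
        return ["satisfaction", "frequency", "churn", "personalization"]
    if state.in_purchase_cycle:
        return ["personalization", "satisfaction", "frequency", "churn"]
    return ["frequency", "churn", "satisfaction", "personalization"]  # hypothetical default

def resolve(proposals, state):
    """proposals maps agent name -> proposed action; first agent in the order wins."""
    for agent in priority_order(state):
        if agent in proposals:
            return proposals[agent]
    return None

proposals = {
    "satisfaction": "hold_promos_until_resolved",
    "personalization": "upgrade_offer",
    "churn": "retention_discount",
    "frequency": "suppress_promos",
}
print(resolve(proposals, CustomerState(open_support_ticket=True, in_purchase_cycle=False)))
print(resolve(proposals, CustomerState(open_support_ticket=False, in_purchase_cycle=True)))
```

Because the ticket check runs first, a customer with both an open ticket and an active purchase gets the satisfaction-first ordering. That precedence between contexts is one more rule someone has to decide on deliberately.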

But perhaps the most elegant solution I've encountered is what some teams call "collaborative optimization." Instead of having agents compete for control, they're designed to jointly optimize for combined outcomes. The personalization AI shares its engagement predictions with the churn prevention AI, which factors them into its retention strategies. The frequency optimization AI provides fatigue scores that influence both personalization and retention timing.
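One way to picture collaborative optimization: instead of each agent proposing only its favorite action, each scores every candidate action, and a single joint objective picks the winner. This sketch assumes a weighted sum of the personalization agent's engagement predictions and the churn agent's retention predictions, discounted by the frequency agent's fatigue score. The scores, weights, and the multiplicative fatigue penalty are all hypothetical.

```python
# Hypothetical per-action predictions shared by two agents for one customer.
ENGAGEMENT = {"upgrade_offer": 0.8, "retention_discount": 0.6, "no_message": 0.1}  # personalization agent
RETENTION = {"upgrade_offer": 0.5, "retention_discount": 0.9, "no_message": 0.4}   # churn agent
WEIGHTS = {"engagement": 0.4, "retention": 0.6}  # hypothetical business weights
ACTIONS = ["upgrade_offer", "retention_discount", "no_message"]

def joint_score(action, fatigue):
    """Combined objective; fatigue (0 = fresh, 1 = exhausted) discounts any message."""
    value = (WEIGHTS["engagement"] * ENGAGEMENT[action]
             + WEIGHTS["retention"] * RETENTION[action])
    if action != "no_message":
        value *= 1.0 - fatigue
    return value

def best_action(fatigue):
    return max(ACTIONS, key=lambda a: joint_score(a, fatigue))

print(best_action(fatigue=0.2))  # retention_discount
print(best_action(fatigue=0.7))  # no_message
```

No agent "wins" here. The fatigue signal changes the outcome by reshaping the shared objective, which is closer to the jointly optimized behavior described above than any vote or ranking.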

The human arbitration fallback

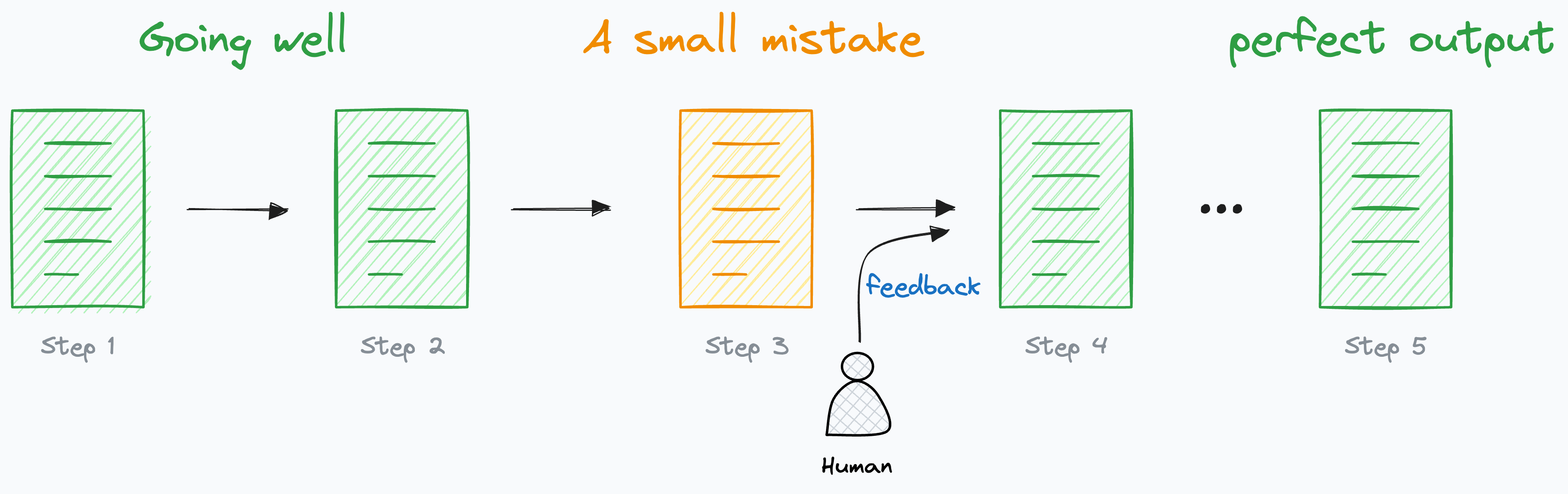

Of course, there's always the option to escalate conflicts to human decision-makers. But remember our math from Part 1: if your systems are making billions of decisions weekly, human arbitration becomes a bottleneck that defeats the purpose of autonomous systems.

Some teams are experimenting with selective escalation, where only conflicts above certain thresholds or involving high-value customers get human review. Others are building learning systems that capture human arbitration decisions and use them to train automatic conflict resolution.
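Here's a minimal sketch of both ideas: a router that sends only severe or high-value conflicts to humans, and a log that keeps each human ruling as a labeled example. The `severity` and `customer_value` fields and both thresholds are hypothetical. The log stops short of actually training a resolver; it just captures the examples and an override rate you might monitor.

```python
from dataclasses import dataclass, field

@dataclass(frozen=True)
class Conflict:
    customer_id: str
    customer_value: float  # hypothetical lifetime-value estimate
    severity: float        # hypothetical disagreement score in [0, 1]
    auto_decision: str     # what the automatic resolver would do

# Hypothetical thresholds; real values depend on your own risk appetite.
SEVERITY_THRESHOLD = 0.8
HIGH_VALUE_THRESHOLD = 10_000.0

def needs_human(c):
    """Escalate only severe conflicts or those involving high-value customers."""
    return c.severity >= SEVERITY_THRESHOLD or c.customer_value >= HIGH_VALUE_THRESHOLD

def route(conflicts):
    """Split conflicts into an automatic queue and a human review queue."""
    auto = [c for c in conflicts if not needs_human(c)]
    human = [c for c in conflicts if needs_human(c)]
    return auto, human

@dataclass
class ArbitrationLog:
    """Stores human rulings as labeled examples for a future automatic resolver."""
    examples: list = field(default_factory=list)

    def record(self, conflict, human_decision):
        self.examples.append((conflict, human_decision))

    def override_rate(self):
        """Share of escalated cases where the human disagreed with the automatic choice."""
        if not self.examples:
            return 0.0
        overrides = sum(1 for c, d in self.examples if d != c.auto_decision)
        return overrides / len(self.examples)

conflicts = [
    Conflict("sarah", 12_000.0, 0.5, "retention_discount"),
    Conflict("c2", 300.0, 0.9, "suppress_promos"),
    Conflict("c3", 300.0, 0.2, "upgrade_offer"),
]
auto, human = route(conflicts)
log = ArbitrationLog()
log.record(human[0], "suppress_promos")  # human overrides the automatic choice
log.record(human[1], "suppress_promos")  # human agrees
print(log.override_rate())  # 0.5
```

A falling override rate over time is one possible signal that the automatic resolver has absorbed enough human judgment to raise the escalation thresholds.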

The unexpected benefit of agent conflicts is that they often reveal business logic you didn't know you had. When your personalization AI and your brand consistency AI disagree about message tone, it forces you to clarify what your brand voice actually means in practice.

These conflicts surface assumptions and priorities that were previously implicit. Do you optimize for immediate revenue or long-term satisfaction? Do you prioritize engagement or respect for customer preferences? Do you value personalization or consistency?

Agentic systems make these trade-offs explicit because they force you to design clear conflict resolution rules. In a way, building multi-agent systems becomes an exercise in clarifying your own business philosophy.

Tomorrow's orchestration

I suspect we're moving toward a future where marketing orchestration looks less like a single conductor leading an orchestra and more like a jazz ensemble where individual musicians improvise within agreed-upon structures. The agents have autonomy to make decisions within their domains, but they're also listening to each other and adapting their actions to create coherent customer experiences.

This requires a different kind of system design, one that emphasizes coordination and communication between agents rather than centralized control. It also requires new kinds of monitoring and debugging tools, because understanding what went wrong becomes much more complex when multiple autonomous systems are involved.
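One basic building block for that kind of debugging is a per-decision trace that records what every agent proposed, which resolution strategy ran, and whose proposal lost. This is a minimal sketch; the field names and the JSON output are hypothetical choices, not a standard format.

```python
import json
from dataclasses import asdict, dataclass, field

@dataclass
class DecisionTrace:
    customer_id: str
    proposals: dict   # agent name -> proposed action
    resolver: str     # which conflict-resolution strategy produced the outcome
    final_action: str
    overridden: list = field(default_factory=list)  # agents whose proposal lost

def make_trace(customer_id, proposals, resolver, final_action):
    overridden = sorted(a for a, act in proposals.items() if act != final_action)
    return DecisionTrace(customer_id, proposals, resolver, final_action, overridden)

trace = make_trace(
    "sarah",
    {"personalization": "upgrade_offer",
     "churn": "retention_discount",
     "frequency": "suppress_promos"},
    resolver="contextual_hierarchy",
    final_action="suppress_promos",
)
print(json.dumps(asdict(trace), indent=2))
```

With traces like this, "why did Sarah get nothing this week?" becomes a query over logged records rather than a reconstruction exercise across three separate systems.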

The teams that figure this out first are going to have a significant advantage. But it's going to require rethinking some fundamental assumptions about how marketing technology should work.

Next week… what happens to human roles when AI handles most of the execution? Because if agents are making the decisions and resolving their own conflicts, what exactly are the humans supposed to be doing?

Have you encountered conflicts between different AI systems in your stack? How are you handling coordination between autonomous agents? I'm particularly curious about escalation protocols and where human judgment still feels essential.

Read the Agentic AI in Martech series:

- Part 1: The billion decision problem

- Part 2: From complicated to complex

- Part 4: The new org chart

- Part 5: Trust without understanding

- Part 6: The Handoff

👥 Connect with me on LinkedIn:

📲 Follow Martech Therapy on:

- Instagram: https://www.instagram.com/martechtherapy/

- Youtube: https://www.youtube.com/@martechtherapy

- Bluesky: https://bsky.app/profile/martechtherapy.bsky.social
